First and foremost, a big thank you for being a valued member of the Let's Talk Text community! Each week, I aim to break down the vast world of NLP into digestible, enlightening, and actionable insights for you. As I continue to evolve this newsletter, understanding your experience is crucial, so I’ve put together a feedback form. It's short, it's sweet, and it's the perfect way for you to shape future editions of Let's Talk Text. Your voice matters to me, and every response will help improve your reading experience.

CLICK HERE TO ACCESS FEEDBACK FORM (takes less than 15 seconds)

Gorilla

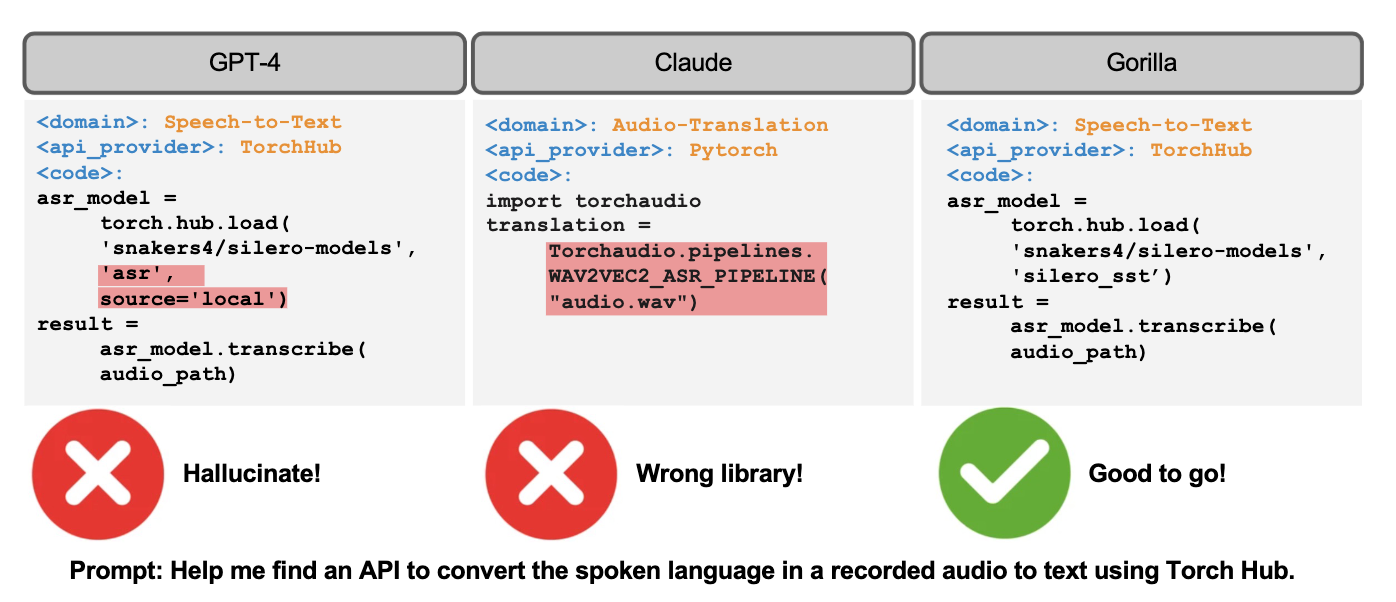

Gorilla, the latest open-source model from researchers at UC Berkeley and Microsoft, blows previous LLMs out of the water at API calling. Given a natural language query, Gorilla can write a semantically and syntactically correct API call to invoke. With Gorilla, you can talk to an LLM that accurately calls 1,600+ (and growing) APIs, with less hallucination than GPT-4. Check out the paper here.

If you haven’t read about Toolformer, a previous API-calling model we’ve covered on Let’s Talk Text, I suggest looking at that first so you can appreciate how large a jump in capability Gorilla provides.

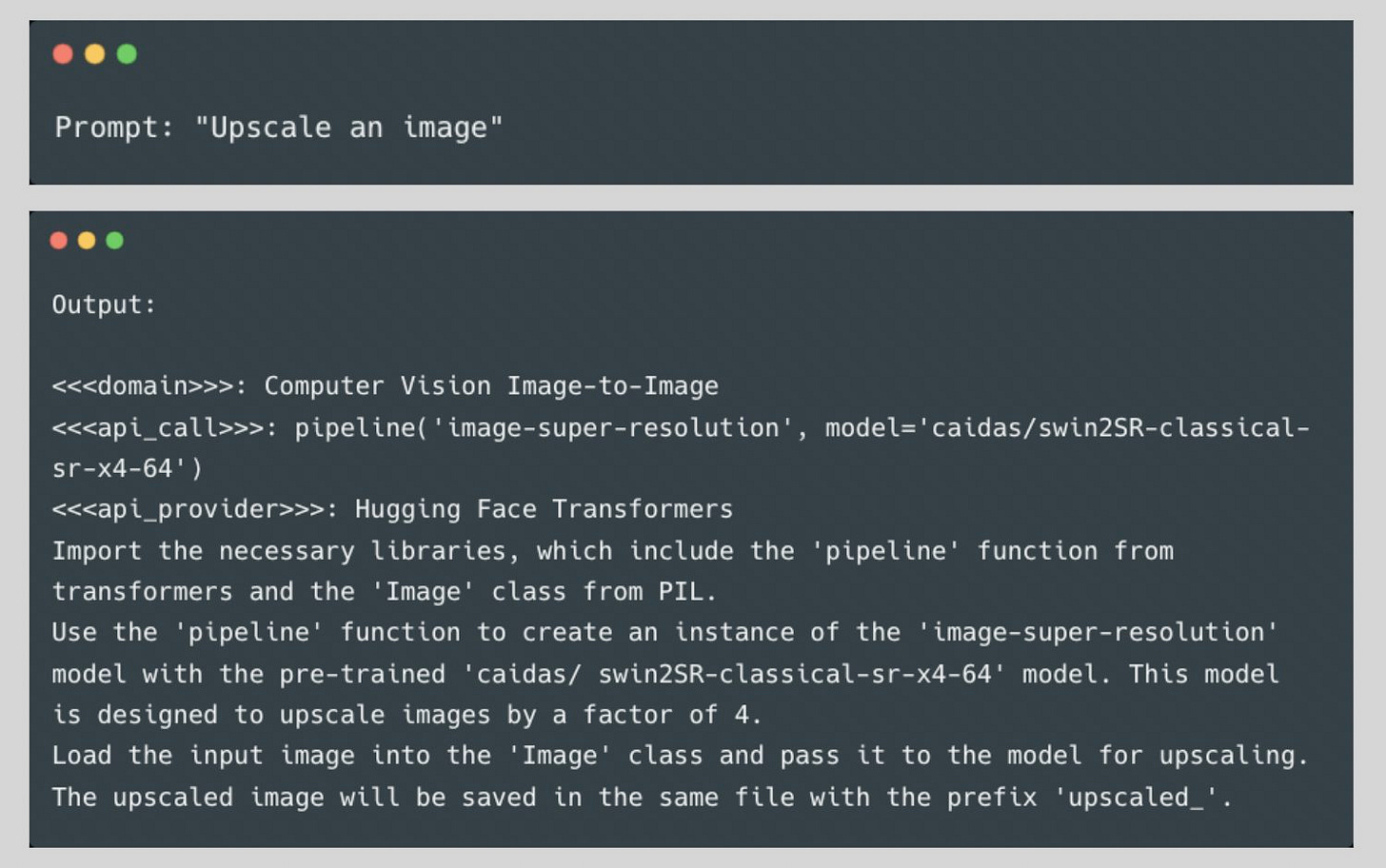

You can also interact with Gorilla in a Google Colab notebook with just a few lines of code here. One of my favorite examples is when Gorilla is asked to upscale an image and (correctly) writes all the code to load and run a model from Hugging Face.

To summarize where LLMs stand and why Gorilla is important:

- Generative models are getting really good at general-purpose work. Check out the last issue on LLaMA 2 to see how well it performs on NLP benchmarks.
- Tool use is something LLMs still struggle with:
  - They often hallucinate the wrong API call.
  - They cannot consistently generate accurate input arguments.
- Allowing LLMs to invoke a vast, ever-changing space of cloud APIs could turn them into the primary interface to the internet. Tasks ranging from booking an entire vacation to hosting a conference could become as simple as talking to an LLM with access to flight, car rental, hotel, catering, and entertainment web APIs.
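To make the two failure modes above concrete, here is a minimal Python sketch that checks a generated call against a small, hand-written API database. A call to a function that isn't in the database counts as a hallucinated API; a real function called with a keyword argument it doesn't accept counts as bad arguments. `API_DATABASE`, `dotted_name`, and `check_api_call` are hypothetical names for illustration, not part of Gorilla, and the paper's own evaluation is more involved than this. Only keyword arguments are checked here, and the code is parsed with `ast`, never executed.

```python
import ast

# Hypothetical, hand-written "API database": dotted function name -> keyword
# arguments it accepts. A real system would build this from scraped API docs.
API_DATABASE = {
    "torch.hub.load": {"repo_or_dir", "model", "source", "force_reload"},
    "transformers.pipeline": {"task", "model"},
}


def dotted_name(node: ast.expr) -> str:
    """Turn the AST for e.g. torch.hub.load back into the string 'torch.hub.load'."""
    if isinstance(node, ast.Name):
        return node.id
    if isinstance(node, ast.Attribute):
        return f"{dotted_name(node.value)}.{node.attr}"
    raise ValueError("unsupported call target")


def check_api_call(code: str) -> str:
    """Classify one generated call as 'ok', 'hallucinated_api', or 'bad_arguments'."""
    call = ast.parse(code, mode="eval").body  # parse only; nothing is executed
    if not isinstance(call, ast.Call):
        raise ValueError("expected a single function call")
    name = dotted_name(call.func)
    if name not in API_DATABASE:
        return "hallucinated_api"  # the function does not exist in the database
    # Keyword names the model passed (kw.arg is None for **kwargs, which is rejected).
    passed = {kw.arg for kw in call.keywords}
    if not passed <= API_DATABASE[name]:
        return "bad_arguments"  # real function, but an argument it does not accept
    return "ok"


print(check_api_call("torch.hub.load(repo_or_dir='pytorch/vision', model='resnet50')"))  # ok
print(check_api_call("torch.hub.fetch(model='resnet50')"))  # hallucinated_api
print(check_api_call("transformers.pipeline(task='image-to-image', resolution=4)"))  # bad_arguments
```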

The main benefits of API-calling LLMs include:

- Accessing vastly larger and ever-changing knowledge bases
- Using search technologies to augment their knowledge
- Completing complex computational tasks
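These benefits come from the application, not the model, actually running the call the LLM writes. As a minimal sketch, assume the model emits a JSON object with hypothetical `name` and `arguments` fields (this is an illustrative format, not Gorilla's). The runtime then looks the tool up in a registry, refuses names it doesn't know, and executes the function. `TOOLS`, `run_tool_call`, and the two example tools are made up for illustration.

```python
import json
import math
from typing import Any, Callable, Dict


def hypotenuse(a: float, b: float) -> float:
    """Length of the hypotenuse of a right triangle with legs a and b."""
    return math.hypot(a, b)


def compound_interest(principal: float, rate: float, years: int) -> float:
    """Balance after `years` of annual compounding at `rate`."""
    return principal * (1 + rate) ** years


# Hypothetical tool registry: maps the names the model may use to real functions.
TOOLS: Dict[str, Callable[..., Any]] = {
    "hypotenuse": hypotenuse,
    "compound_interest": compound_interest,
}


def run_tool_call(model_output: str) -> Any:
    """Execute a call emitted as JSON, e.g. {"name": "...", "arguments": {...}}."""
    request = json.loads(model_output)
    name = request["name"]
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")  # refuse tools the model made up
    return TOOLS[name](**request.get("arguments", {}))


# Pretend this string came back from an API-calling LLM.
print(run_tool_call('{"name": "hypotenuse", "arguments": {"a": 3, "b": 4}}'))  # 5.0
```

Keeping an explicit registry means a hallucinated tool name fails loudly instead of silently running something unexpected.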

The authors of the paper constructed a benchmark for this task, called APIBench, from API documentation scraped from TorchHub, TensorFlow Hub, and Hugging Face. Gorilla, a fine-tuned LLaMA-7B model, achieves significantly higher accuracy and fewer hallucination errors than GPT-4 across the dataset.
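To show roughly how results like these are scored, here is a simplified Python sketch that buckets each generated API into correct, hallucinated (not in the API database at all), or wrong (a real API, but not the one the query needed). The `Example` type, the `score` function, and every API name below are made up for illustration; the paper's actual evaluation compares full API calls and is more involved than matching bare names.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class Example:
    generated_api: str  # API the model produced for a query
    reference_api: str  # API the benchmark expects for that query


def score(examples: List[Example], api_database: Set[str]) -> Dict[str, float]:
    """Fraction of examples that are correct, hallucinated, or a wrong (but real) API."""
    if not examples:
        raise ValueError("need at least one example")
    correct = hallucinated = wrong = 0
    for ex in examples:
        if ex.generated_api == ex.reference_api:
            correct += 1
        elif ex.generated_api not in api_database:
            hallucinated += 1  # the API does not exist at all
        else:
            wrong += 1  # a real API, just not the right one for this query
    n = len(examples)
    return {"accuracy": correct / n, "hallucination": hallucinated / n, "error": wrong / n}


# Made-up API names, purely for illustration.
database = {"vision/resnet50", "vision/upscaler-x4", "audio/speech-small"}
examples = [
    Example("vision/upscaler-x4", "vision/upscaler-x4"),     # correct
    Example("vision/resnet50", "vision/upscaler-x4"),        # wrong API
    Example("vision/super-upscaler", "vision/upscaler-x4"),  # hallucinated
    Example("audio/speech-small", "audio/speech-small"),     # correct
]
print(score(examples, database))
# {'accuracy': 0.5, 'hallucination': 0.25, 'error': 0.25}
```

Separating hallucinations from ordinary wrong choices matters because they fail differently: a wrong-but-real API may still run, while a hallucinated one cannot run at all.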